The IoT story

Since the internet of things (IoT) is very popular nowadays, this is a great opportunity to share some interesting experiences of testing complex IoT systems and devices.

The idea of having all the devices around us wirelessly connected has been alive for almost a century. It was one of Nikola Tesla’s visions of the future. What kept this idea from coming to life was the cost of the hardware needed to connect devices to the Internet. With IPv6, every atom on the surface of the Earth could be assigned an IP address, with enough addresses left over for more than 100 additional planets, as Steve Leibson put it. So, the new rule of the future will be “anything that can be connected will be connected”.

Examples of IoT systems include smart homes, wearables, smart cities (from traffic management to water distribution, waste management, urban security, and environmental monitoring), the industrial internet (manufacturing, logistics, oil and gas, transportation, energy/utilities, mining and metals, aviation), connected cars, connected health, smart retail, smart supply chains, and smart farming. The things connected in IoT systems range widely, from simple sensors to very complex devices such as cars, vending machines, smartwatches, and traffic lights, and even include humans and animals.

What is context-driven testing?

IoT systems are complex environments formed by different types of sensors and devices, different communication protocols, and applications that use different types of data. The main challenge is how to test this complex architecture: which test procedures and good test practices can be used for complex IoT solutions? The context-driven school of testing offers an answer, as it promotes good practices in context.

The context-driven school of testing was declared on the 21st of November 1999 by James Bach, Cem Kaner, Brian Marick and Bret Pettichord (with the support of other colleagues).

Context-driven testing is a human-centered approach. People with different skill sets and knowledge who work together in a complementary team are among the greatest assets of a project.

Over the course of a project, judgment and skill accumulate and are continually re-evaluated against the project context.

There are good practices in a specific context, but there are no best practices. Good practices are always re-evaluated since projects unfold over time in a way that is often not predictable. In one situation a good practice can give great results but over time, as the context of the project changes, that practice may not bring the anticipated results anymore. The context-driven test approach puts focus on awareness to do the right things at the right time and to test products effectively.

The product is a solution to a problem. If the product doesn’t solve the problem, it simply does not work. In the context-driven test approach, the focus is on the context of the product as a solution.

Why do we need context-driven testing?

The value of context-driven testing is the way testing is organized around the product context.

Consider two products (picture above): one is a smartwatch for measuring steps, heart rate, activity, etc., and the other is a medical infusion pump for monitoring body fluids and infusing drugs into patients. Both products measure body parameters, but the contexts in which they are used are different.

Medical infusion pumps are used in medical care and hospitals. Smartwatches are used in everyday life. Test practices that we use for testing the smartwatch could not be used as good practices for testing the medical infusion pump and vice versa.

For testing the smartwatch, the focus would be on functionality, usability, connectivity, etc. For testing the medical infusion pump, the focus would be on security, compliance with standardized protocols, etc. In conclusion, context-driven testing puts the product context first and organizes all suitable test practices around it.

How do we use context-driven testing?

Information in context

In the context-driven test approach, the main purpose of testing is to provide information to different stakeholders. The context of the information is not the same for all stakeholders. For example, the test report for the management is usually not the same as the test report for the clients.

“X” shaped tester

Testers should possess a diverse set of skills and knowledge. Beyond good technical knowledge, testers should have good management and communication skills in order to be advocates for different stakeholders and to put information in the right context. Of course, one tester cannot know everything, so the focus should be on a complementary team that covers all the necessary knowledge and skills.

Role-playing

Since the product can be used by different types of users, testers should simulate the behavior of those users by role-playing while testing the product. This way, product context would be evaluated in the right way.

Session-based testing

Session-based testing is the main focus of the context-driven test approach since a lot of the important problems can be found following test ideas and improvising during testing. Basically, session-based testing is an exploratory testing activity recommended to be organized in sessions with a specific time frame (see session-based test management by James and Jon Bach) in order for testers to have focus during testing.

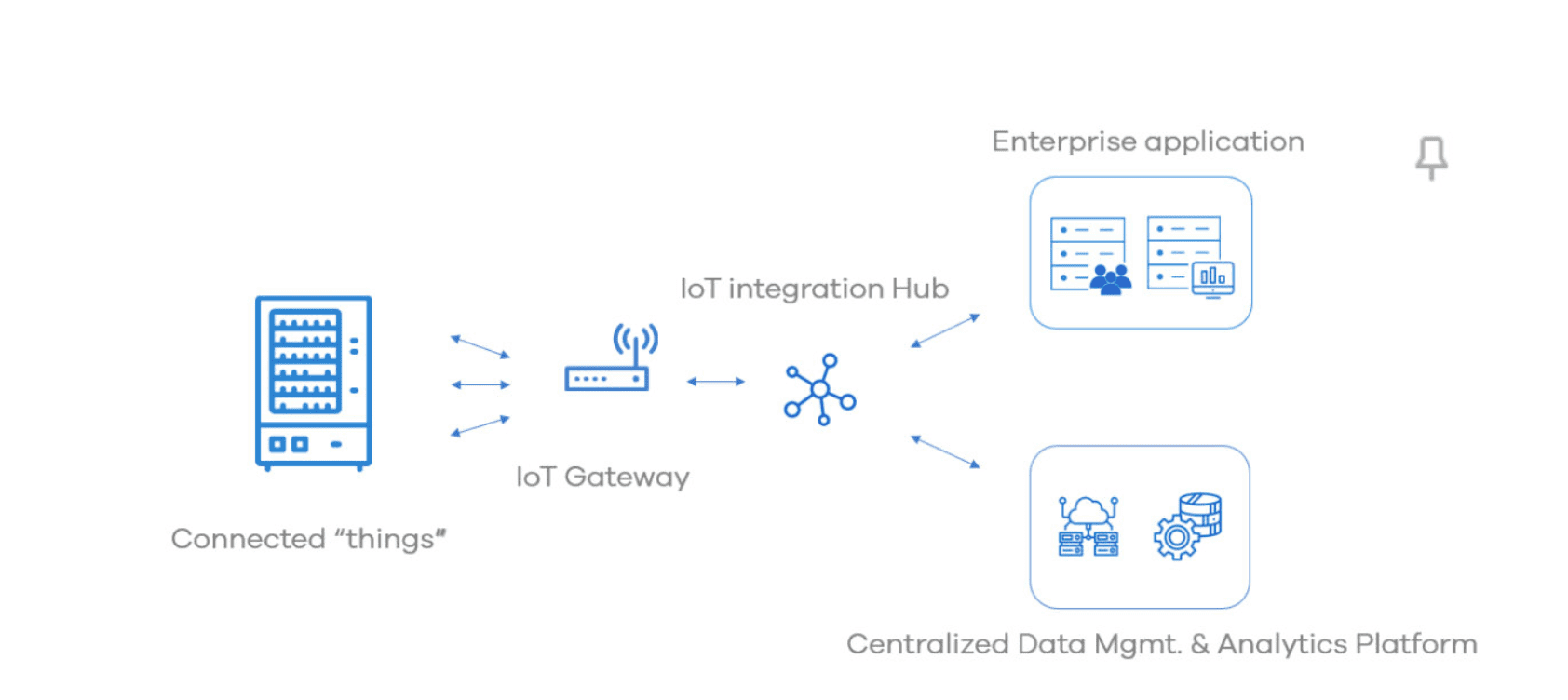

The intelligent vending machine as a complex IoT device

Let’s look at an example of a smart vending machine as an IoT device.

An intelligent vending machine has a mini PC inside that drives the software, a big touch screen with the sales application, and part of the software which detects demographic profiles of the users (e.g. adult male, adult female, senior male, etc). All the hardware devices and sensors are connected to the mini PC and can communicate with the software. The machine is always online and can send all the data (e.g. current state of the inventory, state of hardware devices inside, transaction details, user interactions, etc) to the backend system. All of that data is used to create one business intelligence model and lead to a smart retail solution.

How do we cope with testing challenges?

Testing software together with hardware is delicate work, and it requires different kinds of testing skills.

Testing as an R&D process

Testing has been included in the development process of this product from the beginning. It is an important part of the R&D process. While searching for the right mini PC for the machine, we ran performance tests on numerous PCs to evaluate CPU consumption, heating, and the performance of the sales application while the PC was connected to all the peripheral devices inside the machine. Degradation testing of the hardware devices was a way for the software to learn how to cope with hardware issues. For example, a test in which a coin is jammed inside the coin payment device taught the software how to continue working after this situation occurs in the field. Testing the coin device as a payment device can also be very interesting. Situations such as the device being disconnected from the integration board, different coin sizes and denominations, and coins jammed inside the insertion unit or the dispensing unit are all very valuable when creating a test plan for degradation testing of the machine's payment process.
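The degradation scenarios above can be sketched as a small fault matrix run against a simulated coin device. This is only an illustrative sketch: `SimulatedCoinDevice`, `SalesSession`, the fault names, and the accepted denominations are all hypothetical and do not describe the real machine's software.

```python
from enum import Enum


class CoinFault(Enum):
    NONE = "none"
    DISCONNECTED = "disconnected"        # device unplugged from the integration board
    JAMMED_INSERT = "jammed_insert"      # coin stuck in the insertion unit
    JAMMED_DISPENSE = "jammed_dispense"  # coin stuck in the dispensing unit


class SimulatedCoinDevice:
    """Hypothetical stand-in for the coin payment device."""

    ACCEPTED = {10, 20, 50, 100}  # assumed denominations, in cents

    def __init__(self):
        self.fault = CoinFault.NONE

    def insert(self, value):
        if self.fault is CoinFault.DISCONNECTED:
            raise ConnectionError("coin device not reachable")
        if self.fault is CoinFault.JAMMED_INSERT:
            raise RuntimeError("coin jammed in insertion unit")
        return value in self.ACCEPTED

    def dispense_change(self, amount):
        if self.fault is CoinFault.DISCONNECTED:
            raise ConnectionError("coin device not reachable")
        if self.fault is CoinFault.JAMMED_DISPENSE:
            raise RuntimeError("coin jammed in dispensing unit")
        return amount


class SalesSession:
    """Hypothetical slice of sales-application logic under test."""

    def __init__(self, device):
        self.device = device
        self.credit = 0
        self.cash_enabled = True

    def pay_coin(self, value):
        if not self.cash_enabled:
            return "cash_unavailable"
        try:
            accepted = self.device.insert(value)
        except (ConnectionError, RuntimeError):
            # Degrade gracefully: disable cash, keep the session alive.
            self.cash_enabled = False
            return "cash_unavailable"
        if not accepted:
            return "rejected"
        self.credit += value
        return "accepted"

    def refund(self):
        try:
            self.device.dispense_change(self.credit)
        except (ConnectionError, RuntimeError):
            return "refund_pending"  # credit is kept so nothing is lost
        self.credit = 0
        return "refunded"


def run_degradation_matrix():
    """Inject each fault after one healthy payment and record the outcome."""
    results = {}
    for fault in CoinFault:
        device = SimulatedCoinDevice()
        session = SalesSession(device)
        session.pay_coin(50)          # device is still healthy here
        device.fault = fault          # now degrade the hardware
        payment = session.pay_coin(50)
        refund = session.refund()
        results[fault.value] = (payment, refund, session.credit)
    return results
```

Each row of the matrix answers one "what if" question, so a new hardware failure mode becomes one more enum member and one more expected outcome.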

Hardware dependency

To make sure that testing is performed correctly, all of the hardware components (e.g. cables, sensors, devices, etc.) need to be in the proper state before testing starts. When investigating an issue, the first step must be to verify all the hardware inside the machine. For example, if coins are not accepted by the machine, the coin payment device may be in an incorrect state and the issue may not be in the sales application software at all. So first we need to rule out faulty hardware, and only then report the issue to the software development team.
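The "hardware first" triage order can be sketched as a simple pre-flight check. The component names and check functions below are hypothetical placeholders; on a real machine each check would query the actual device.

```python
from typing import Callable, Dict, List


def preflight(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Return the names of hardware components whose check failed or raised."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False  # a check that crashes counts as a hardware failure
        if not ok:
            failed.append(name)
    return failed


def triage(symptom: str, checks: Dict[str, Callable[[], bool]]) -> str:
    """Decide where an observed issue should go, verifying hardware first."""
    failed = preflight(checks)
    if failed:
        return f"hardware: fix {', '.join(failed)} before retesting '{symptom}'"
    return f"software: report '{symptom}' to the development team"
```

For example, `triage("coins not accepted", {"coin_device": lambda: False})` points at the coin device instead of producing a software bug report.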

Limited resources

Since the mini PC has limited processing power, and the facial recognition software works offline and needs a lot of processing power, we need to monitor CPU consumption while testing the sales application.
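One way to watch CPU consumption during a test is to sample it in a background thread while the test steps run. The sketch below is an assumption about how this could be done, not a description of our tooling: `CpuMonitor` is a hypothetical helper, and the `sample` callable is injected so that on a real machine it could wrap a system call such as `psutil.cpu_percent`.

```python
import threading


class CpuMonitor:
    """Collects CPU readings in the background while a test runs."""

    def __init__(self, sample, interval=0.05):
        self._sample = sample        # callable returning CPU usage in percent
        self._interval = interval    # seconds between samples
        self.readings = []
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def _run(self):
        while True:
            self.readings.append(self._sample())
            # wait() returns True as soon as the stop flag is set
            if self._stop.wait(self._interval):
                break

    def __enter__(self):
        self._thread.start()
        return self

    def __exit__(self, *exc):
        self._stop.set()
        self._thread.join()
        return False  # never swallow exceptions from the test body

    def peak(self):
        return max(self.readings, default=0.0)
```

A test would wrap its steps in `with CpuMonitor(sample) as monitor:` and afterwards compare `monitor.peak()` against a budget chosen for the target mini PC.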

Network interruptions

Internet connectivity performance can depend on the hardware implementation (e.g. Wi-Fi module, 3G, 4G, LAN, etc.) and on the location of the vending machine (e.g. a metro station below the ground, an office in a building whose walls act as a Faraday cage, etc.). Our tests need to cover network interruptions at every moment of the software lifetime (e.g. during a product purchase, while sending events from the machine, while receiving new media (images, videos) on the machine, etc.).
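Interruptions at every moment can be approximated by cutting the network at each phase of a purchase in turn and checking that no event is lost. This is a minimal sketch under stated assumptions: `FlakyNetwork`, `EventUploader`, the store-and-forward behavior, and the phase names are hypothetical and only illustrate the test idea.

```python
class FlakyNetwork:
    """Hypothetical network stub that can be switched off by the test."""

    def __init__(self):
        self.online = True
        self.delivered = []

    def send(self, event):
        if not self.online:
            raise ConnectionError("network down")
        self.delivered.append(event)


class EventUploader:
    """Hypothetical store-and-forward uploader on the machine side."""

    def __init__(self, network):
        self.network = network
        self.pending = []

    def emit(self, event):
        self.pending.append(event)
        self.flush()

    def flush(self):
        # Send queued events in order; stop at the first failure.
        while self.pending:
            try:
                self.network.send(self.pending[0])
            except ConnectionError:
                return False
            self.pending.pop(0)
        return True


PHASES = ["product_selected", "payment_received", "product_dispensed"]


def purchase_with_outage(outage_at):
    """Run one purchase with the network down during a single phase."""
    network = FlakyNetwork()
    uploader = EventUploader(network)
    for phase in PHASES:
        network.online = phase != outage_at
        uploader.emit(phase)
    network.online = True   # connectivity comes back after the purchase
    uploader.flush()
    return network.delivered
```

Looping `purchase_with_outage` over every phase gives one test case per interruption point, and the expected result is always the full, ordered list of events on the backend side.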

Different types of users of the system

Role-playing is very important in the testing of our system. There are three different parts of our system: the sales application (used by end consumers of the products inside the machine), the maintenance application (used by technicians who maintain and refill the machines) and the backend portal (used by operators in the office and by business people). To make sure that the needs of those users are satisfied by these software solutions, we need to approach testing with stories such as the following:

Sales application users can be kids who like to play with the touch screen (the software needs to support lots of user interactions with the touch screen), people who are in a hurry, waiting for the train at the train station (transactions need to be very fast), elderly people who are afraid of new technologies (transactions need to be very simple), etc.

Maintenance application users can be people who refill the machines and who do not need to know anything about the software, so the application must be very simple, such as entering just a few codes.

Backend portal users can be office people who monitor the state of machines on the streets (the portal needs to have real-time information about the state of hardware inside the machine and the state of the inventory; all the proper data needs to be available on the portal all the time), or business people (they need to be able to get all the sales information and different related statistics from the machines, and all of this data needs to be accurate and properly filtered).

Security issues

Since the machines stand unattended in public 24/7, they need a certain level of security. On the hardware side, the machines have locks and security glass. All communication with the backend system and the cloud is encrypted. But sometimes, people think of different ways to get their products for free. One situation was shared by a customer in production. When the people who refill the machines opened the door, they found a cat inside. Someone had put the cat into the output tray of the machine and the cat had jumped onto the plate with the products. While the cat amused itself with the sound its claws made as it played around with the snacks inside the machine, several products fell down into the output tray and the person got the products for free.

All of those different aspects of hardware and software functioning together demand that testers keep broadening their thinking and searching for new approaches, while constantly asking the question “What if…?”

Future challenges of testing IoT solutions

In the future, testing the complex IoT solutions will bring many opportunities regarding developing new test procedures and incorporating IoT technology in testing processes and testing tools. But also, the future brings many challenges regarding standardization in IoT testing, test automation, testing scope, and connectivity challenges.

Standardization

Standardization in testing IoT solutions is still in its infancy. There are no established test methodologies to follow, only good practices. In a way, this is a good thing because it gives flexibility in testing and the opportunity to try different test practices. On the other hand, standardization could provide a starting point and a guide on how to implement test procedures and good practices in different projects.

Test scope

Defining the testing scope for IoT systems with maximum possible coverage will be a challenge in the future. A possible solution is to focus on the end-users and how they use IoT systems. That information could be used to create test coverage that covers the necessary functionalities of IoT systems.

Connectivity

Without connectivity, there are no IoT systems and the main challenge in the future will be testing different types of connectivity, different signal strength, and different communication protocols. The solution to that challenge is to continue using network simulators for simulating different network conditions and network signals.

Automation

Since IoT systems include hardware and software, automation will be the greatest challenge for this type of system. Today, we have automation frameworks that can help testers, but they can automate only the software part of IoT systems. Automating hardware and human interaction will demand the creation of automation platforms that include hardware simulators running together with automated scripts.

Article author - Milena Lazarevic

Milena Lazarevic is head of testing in a young software development company called Invenda based in Novi Sad. Connect with Milena via LinkedIn - https://www.linkedin.com/in/milena-lazarevic-62244650/

Co-author - Dejan Nikolic

Dejan Nikolic is a test developer at Invenda Solutions. Connect with Dejan via LinkedIn - https://www.linkedin.com/in/dejan-nikolic-7147a225/