What’s the deal with Chatbots?

Soumya Mukherjee shares useful insights and tips to do it right. Read on to know more…

QA has always been a specialized job. Not many people believe so, but years of practice have proven it. Even the best developers struggle to test their own work: they either miss critical scenarios or leave the integration scenarios untested. Dedicated QA supplements the development process and has become essential to product quality. Chatbot testing shows just how specialized QA is: making sure a chatbot works well requires a lot of understanding of how it works, including its internals, technology stack, and algorithms, and then generating scenarios to test all of that.

If you do not know all of the above, the rest of this article will help you understand what is required to test a chatbot effectively. My expertise lies in automation, and there are some questions that I will ask at the end of this article to make the reader think about how we can achieve such things, as they do not exist in the tools yet are simple things that ensure maximum quality.

In today’s chatbot QA, most of the testing in the industry is manual and black box. Testers typically check:

- Tedious conversation flows of the users

- Small talk scenarios such as “Do you like people?” or “Do you know a joke?”

- Fallback, which checks whether the chatbot can gracefully handle input it does not understand

- Integrations with APIs, databases, voice-based services, etc.

Moreover, testers often do not know how the model works, because model engineers develop the underlying model and QA does no further monitoring of it. Analytics tools for monitoring are available, but using them requires technical expertise and an understanding of the internals. As a result, 90% of the time the bot breaks and no one understands when it will break; most of the time the bot simply gets stuck.

Before looking into the remediation, let’s see some more issues that testers face in chatbot QA.

- One of the key issues is continuous story creation as the bots evolve. The bots are meant to converse like humans, and QA has limited means to create or gather human behavioural data.

- There are no tools to manage story coverage, which means that testers do not even recognize that stories are being missed and keep testing on a redundant data set.

- The training data may not correspond to the new stories (a story is an end-to-end user chat sequence with the bot), which leads to stale data being used each time. Most of the time, production data is never used to train the models, leading to inaccurate results and a faltering bot.

- Most automation tools only offer record and playback, so they keep testing the same set of stories. This is the pesticide paradox: the repeated tests stop finding new defects, and the bot keeps failing on newer data and scenarios.

- In most cases, the stories are written in a text file and no automation tool can read through the existing story list and bring that intelligence into the tool.

- A common problem in QA is the absence of a central dashboard. In the chatbot context, there is no generic dashboard that captures:

- Intent matching

- Slot filling, checked as part of entity testing

- Entity validation

- The confidence score of the model when a test is executed

- The confusion matrix, which records the values of precision, recall, and F1-score

- There is no easy way to reset the bot; if it gets stuck during a conversation, it stays stuck.

- Multilingual bot QA is a challenge, and most of the time companies have to build two separate bots.

Folks who dig deeper also find that the bot always answers with the intent that has the highest confidence score. When one intent consistently scores highest, the bot ends up predicting the same thing for inputs that belong to several different intents.
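The precision, recall, and F1-score figures that such a dashboard would show can be computed from pairs of expected and predicted intents. The following is a minimal sketch in plain Python; the function name `intent_report` and the sample intent labels are illustrative assumptions, not part of any particular bot framework.

```python
from collections import Counter


def intent_report(expected, predicted):
    """Per-intent precision, recall and F1 from two parallel label lists."""
    if len(expected) != len(predicted):
        raise ValueError("expected and predicted must have the same length")
    tp, fp, fn = Counter(), Counter(), Counter()
    for exp, pred in zip(expected, predicted):
        if exp == pred:
            tp[exp] += 1
        else:
            fp[pred] += 1  # the predicted intent was wrong
            fn[exp] += 1   # the expected intent was missed
    report = {}
    for intent in sorted(set(expected) | set(predicted)):
        p_den = tp[intent] + fp[intent]
        r_den = tp[intent] + fn[intent]
        precision = tp[intent] / p_den if p_den else 0.0
        recall = tp[intent] / r_den if r_den else 0.0
        pr_sum = precision + recall
        f1 = 2 * precision * recall / pr_sum if pr_sum else 0.0
        report[intent] = {"precision": precision, "recall": recall, "f1": f1}
    return report


# Hypothetical test run: what the tester expected vs. what the bot predicted.
expected = ["greet", "greet", "pay_fees", "pay_fees", "goodbye"]
predicted = ["greet", "pay_fees", "pay_fees", "pay_fees", "goodbye"]
report = intent_report(expected, predicted)
for intent, scores in report.items():
    print(intent, {name: round(value, 2) for name, value in scores.items()})
```

Tracking these numbers per intent after every test run makes it easier to spot an intent that is absorbing utterances meant for other intents.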

So, the question is how to make sure that the bot never breaks? Here are some ways to test your bot effectively:

- It is highly important to refresh the training data with production data. If this is not done, the bot will always be trained on stale, out-of-context data for the evolving scenarios.

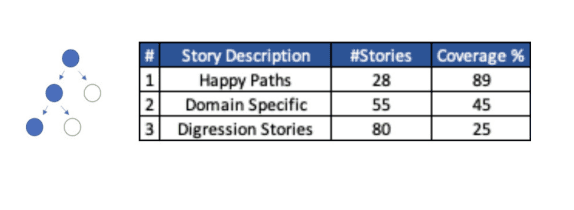

- The QA needs to always create scenarios containing the happy path, contextual questions, digressions, domain-specific questions & stateless conversations

- QA needs to verify that the entities are mapped correctly for each scenario. For example, in a school fees scenario, the entity can get wrongly mapped to the bus fee, the tuition fee, or some other fee.

- Automated tests, mostly at the API level, should consume all the stories and run them each time as part of regression testing. The stories can be consumed either directly from the stories file (the Stories.md file in Rasa) or from a repository where they are stored.

- Story coverage visualization should always be part of the execution; it shows how testing is progressing and whether the tests can reach 100% story coverage. Although there are no story coverage tools available, a graph database like neo4j can be used to store the stories, and the nodes can then be traversed each time to run the stories against the bot.
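Reading the story list and measuring story coverage can be sketched in a few lines. The example below assumes stories written in the Markdown layout used by Rasa 1.x story files (`## story name`, `* intent`, `- action`); the helper names `parse_stories` and `story_coverage` and the sample stories are hypothetical, and a real setup would read the file from disk and could store the result in a graph database instead of a dictionary.

```python
def parse_stories(markdown_text):
    """Return {story_name: [intent, ...]} from Markdown-style stories."""
    stories = {}
    current = None
    for raw in markdown_text.splitlines():
        line = raw.strip()
        if line.startswith("## "):
            current = line[3:].strip()
            stories[current] = []
        elif line.startswith("* ") and current is not None:
            # Drop any entity annotation such as {"fee_type": "tuition"}.
            stories[current].append(line[2:].split("{", 1)[0].strip())
    return stories


def story_coverage(stories, executed_names):
    """Return (fraction of stories executed, sorted list of missed stories)."""
    executed = set(executed_names) & set(stories)
    missed = sorted(set(stories) - executed)
    ratio = len(executed) / len(stories) if stories else 0.0
    return ratio, missed


# Assumed sample content; not taken from a real project.
SAMPLE = """
## happy path
* greet
  - utter_greet
* ask_fees{"fee_type": "tuition"}
  - utter_fees

## say goodbye
* goodbye
  - utter_goodbye
"""

stories = parse_stories(SAMPLE)
ratio, missed = story_coverage(stories, ["happy path"])
print(f"coverage={ratio:.0%}, missed={missed}")
```

Feeding the names of the stories that the regression run actually executed into `story_coverage` gives a simple number to plot over time, plus the list of stories that were never exercised.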

- Most companies do not use bot emulation platforms for manual testing, but tools like RasaX and BotFront can be used to visualize the execution even while the bot is in development.

- It is very important to check the model accuracy with each conversation and then build up a pattern to understand the rise and fall of the model, along with:

- Confidence score

- Confusion Matrix including precision, recall, and f1-score

- Cumulative accuracy profile

- Cross-Validation results

- Exhaustive testing which checks bot resiliency is required to be part of the QA plan

- Integration checks with external databases, services, and most importantly all the webhooks need to be part of the QA plan as well.

- Fault tolerance needs to be tested through performance testing that verifies bot response times and session management. Most of the time, under large volumes the bot response time increases to an unacceptable level and sessions get overridden, which makes the entire bot infrastructure collapse.

- In case of live assistance, the handshake and the transfer of the flow need to be checked as well.

- One important aspect that QA neglects is security testing of various types. Security assessments have shown bots revealing a lot of information about user data, which needs to be checked. Hence, a level of security analysis on the APIs needs to be performed. Along with that, typing speed, punctuation, and typos need to be checked.
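The typo and punctuation checks from the last point can be driven by generated input rather than hand-written variants. Below is a minimal sketch, assuming a hypothetical helper `typo_variants` that swaps two adjacent characters and varies the trailing punctuation; it only produces the noisy utterances, and sending them to the bot and asserting on the predicted intent is left to whatever test client is in use.

```python
import random


def typo_variants(utterance, count=3, seed=0):
    """Generate noisy copies of an utterance for robustness testing.

    Each copy has one pair of adjacent characters swapped and a randomly
    chosen trailing punctuation. A fixed seed keeps runs repeatable.
    """
    rng = random.Random(seed)
    variants = []
    for _ in range(count):
        chars = list(utterance)
        if len(chars) > 1:
            i = rng.randrange(len(chars) - 1)
            chars[i], chars[i + 1] = chars[i + 1], chars[i]
        variants.append("".join(chars) + rng.choice(["", "?", "!!", "..."]))
    return variants


utterance = "what is the bus fee"
variants = typo_variants(utterance, count=5, seed=42)
for variant in variants:
    print(variant)
```

Because the generator is seeded, a failing variant can be reproduced exactly in the next run, which matters when the failure has to be handed over to the model engineers.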

Along with the above, there are a few other KPIs that are required to be tracked which are:

- Activity volume

- Bounce rate

- Retention rate

- Open sessions count

- Session times (conversation lengths)

- Switching of multiple stories in times of large conversations

- Goal completion rate

- User feedback (will be helpful for sentiment analysis as well)

- Fallback rate (confusion rate, reset rate, and the human takeover rate)
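Several of these KPIs reduce to simple ratios over session logs. The sketch below assumes a hypothetical log record with the fields `turns`, `fallbacks`, `goal_completed`, and `human_takeover`; real analytics tools will expose different field names, so treat the structure as an illustration only.

```python
def conversation_kpis(sessions):
    """Compute a few conversation KPIs from a list of session records."""
    total_turns = sum(s["turns"] for s in sessions)
    fallbacks = sum(s["fallbacks"] for s in sessions)
    completed = sum(1 for s in sessions if s["goal_completed"])
    takeovers = sum(1 for s in sessions if s["human_takeover"])
    n = len(sessions)
    return {
        # Share of bot turns that ended in a fallback response.
        "fallback_rate": fallbacks / total_turns if total_turns else 0.0,
        # Share of sessions in which the user reached the goal.
        "goal_completion_rate": completed / n if n else 0.0,
        # Share of sessions handed over to a human agent.
        "human_takeover_rate": takeovers / n if n else 0.0,
        # Average conversation length in turns.
        "avg_turns_per_session": total_turns / n if n else 0.0,
    }


# Assumed sample data for illustration.
sessions = [
    {"turns": 10, "fallbacks": 1, "goal_completed": True, "human_takeover": False},
    {"turns": 4, "fallbacks": 0, "goal_completed": True, "human_takeover": False},
    {"turns": 6, "fallbacks": 3, "goal_completed": False, "human_takeover": True},
]
kpis = conversation_kpis(sessions)
for name, value in kpis.items():
    print(f"{name}: {value:.2f}")
```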

I am now sure that you would concur that chatbot testing requires much more effort to make it a specialized QA practice. With this set of standard practices, you can test chatbots much more effectively. In later articles in this series, I will discuss how you can build a story coverage tool for chatbots, along with some fancy automation tools available in the market and how they can be used to “EFFECTIVELY” drive your test automation for the “CHATBOT”.

If you have any questions, feel free to reach out to me on Twitter (@QASoumya) or LinkedIn.com/in/mukherjeesoumya

Soumya Mukherjee

A passionate tester but a developer at heart. A decade and a half of extensive experience doing smart automation with various tools and tech stacks, developing products for QA, running large QA transformation programs, applying machine learning concepts in QA, reducing cycle time for organizations through effective use of resources, and working in applied reliability engineering. Loves to help others, solve complex problems, and share experience and success stories with folks. Authored books on Selenium published by Tata McGraw-Hill and Amazon. A father of a lovely daughter.