In this article, I’ll share the story of how my understanding of testability has evolved over the last fifteen years. Hopefully, once you’ve read the post, you’ll share my enthusiasm for all things testability. I’ve discovered that a whole-team focus on testability is one of the few levers in software delivery that can reliably improve a team’s productivity.

Discovering that developers can make software easier to test

Like many of the lessons I’ve learned in my career, I discovered the value of testability by chance rather than by design.

As a junior tester, I found myself working on an R&D project building the world’s first camera safety system. It was a fascinating project that presented me with a host of different testing challenges. It was a complex system consisting of visual sensors, a physical control unit and a desktop application for configuring and controlling the safety system in an industrial environment.

Of all the challenges I faced, the single most frustrating problem was trying to reproduce crashes in the application. The application allowed safety engineers to monitor industrial environments so that, when workers approached dangerous machinery, the system would detect their presence and the machinery would slow down or stop. It required a sophisticated graphical user interface that allowed safety engineers to interact with representations of the environment.

An unfortunate side effect of this sophistication was a persistent stream of application crashes triggered by even subtle interactions. The safety-critical nature of the system meant I had to investigate and replicate every single crash that we observed. Each crash took hours or even days to replicate, because both the order and the location of user clicks were often critical.

One day, after encountering yet another crash in the application, I decided to pair with one of the developers to replicate the issue. As usual, we followed the steps I recalled completing before the application crashed. We repeated the steps time and time again, introducing slight variations each time. Eventually, after hours of back and forth, we replicated the issue… success at last. Having replicated the crash, the developer quickly isolated the cause and fixed the issue. That’s where the story gets interesting.

Irked at having wasted hours trying to replicate this issue, the developer suggested adding a flag to the application that would record all user interactions and write them out to a file. Two hours later, his changes were in place and ready for use.

From that point forward, those hard-to-replicate issues became a thing of the past. Whenever I experienced a crash, I copied the contents of the interaction log into a bug report, and the developers could replicate the crash easily.
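To make the idea concrete, here is a minimal sketch of what such a recording flag might look like. The original application’s code isn’t described, so everything here is an assumption: the `InteractionRecorder` class, the JSON-lines file format and the `replay` helper are hypothetical names chosen for illustration. The recorder appends each user action to a file only when the flag is enabled, and the replay helper feeds the recorded actions back to the application in their original order.

```python
import json
import time
from dataclasses import asdict, dataclass
from typing import Callable, List


@dataclass
class Interaction:
    """One user action, e.g. a click at screen coordinates (x, y). Hypothetical schema."""
    kind: str
    x: int
    y: int
    timestamp: float


class InteractionRecorder:
    """Hypothetical recorder: appends every interaction to a JSON-lines file when enabled."""

    def __init__(self, path: str, enabled: bool) -> None:
        self.path = path
        self.enabled = enabled  # the "flag", e.g. set from a command-line option (assumed)

    def record(self, kind: str, x: int, y: int) -> None:
        if not self.enabled:
            return  # recording is off: no file is created or written
        event = Interaction(kind, x, y, time.time())
        # Open, append and close on every event so the line is handed to the
        # operating system immediately and survives a later application crash.
        with open(self.path, "a", encoding="utf-8") as log:
            log.write(json.dumps(asdict(event)) + "\n")


def load_interactions(path: str) -> List[Interaction]:
    """Read the recorded interactions back from the log file."""
    with open(path, encoding="utf-8") as log:
        return [Interaction(**json.loads(line)) for line in log if line.strip()]


def replay(path: str, dispatch: Callable[[Interaction], None]) -> None:
    """Feed recorded interactions to the application's (hypothetical) event handler, in order."""
    for event in load_interactions(path):
        dispatch(event)
```

In use, a tester would run the application with recording enabled, attach the log file to the bug report, and a developer would call `replay` with the application’s event handler to drive it through exactly the same sequence of clicks.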

This experience taught me two valuable lessons:

- The first was that, as a team, we need to share the challenges each of us faces in our own roles. In this case, the developers had not felt the pain of trying to replicate crashes themselves and therefore did not realise this was such a huge problem. From my perspective, I had no idea that this problem could be solved so easily. Developers are problem solvers, so when we expose them to a problem, they naturally look for a solution.

- The second lesson I learned was that a relatively meagre investment in testability can have a hugely disproportionate impact on the testing effort. In this case, a couple of hours of coding saved me countless hours of trying to replicate crashes.

Deliberately designing software with testing in mind

This planted a seed in my mind that lay dormant for a few years, until I again found myself in a situation where doing great testing seemed almost impossible. I started working as a test automation engineer in a newly established software engineering department for a hardware company. This was the company’s first time building a software product, and after positive feedback from the market, we were tasked with building upon its initial proof of concept.

As part of onboarding, all 35 people in the new engineering department were tasked with regression testing the software for a forthcoming release. After seven weeks of torturous regression testing, the release finally limped out the door.

This regression testing exposed the team to testing challenges that made the whole experience exhausting. The existing test automation was so unreliable that it had to be disregarded. We had to test everything manually, including checking thousands of product attributes via a REST API. When we did find and fix issues, the fixes often introduced new bugs in unrelated areas of the product. The highly coupled nature of the code meant that we had to run a full regression cycle for every change we made. This resulted in people working late nights and weekends. Within a week of the release, customers had reported numerous issues, and morale in the engineering department plummeted.

Once the dust had settled, the software architect came to my desk and asked me a profound question: “How can we make this system easier to test?” We talked through the testing challenges we had encountered and formulated an approach to design our first component with testability as a primary concern. I use the mnemonic CODS to describe the design approach we adopted. Each letter represents a design attribute to consider when designing a system with testability in mind.

- Controllability – The ability to control the system in order to visit each important state.

- Observability – The ability to observe everything important in the system.

- Decomposability – The ability to decompose the system into independently testable components.

- Simplicity – How easy the system is to understand.

We built the new component as a single, independently testable service. We added control points that allowed us to manipulate its state and observation points that allowed us to see its inner workings. Within twelve months of introducing this approach, we had revolutionised the way the whole software organisation delivered software. Painful regression testing cycles were a thing of the past, as we now had robust, reliable automation that provided almost immediate feedback on every change. This freed up our testers to do deep, valuable testing. Teams were working at a sustainable pace and delivering better software faster.
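As an illustration only, the sketch below shows what control points and observation points could look like in a small service. The actual component isn’t described here, so the `AttributeService` class, its `seed` and `snapshot` methods and the injected clock are all hypothetical. `seed` is a control point that puts the service straight into a known state; `events` and `snapshot` are observation points that expose what happened inside; injecting the clock keeps the service independent of the real system time, so it can be tested in isolation.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass
class AttributeService:
    """Hypothetical service holding product attributes, designed with CODS in mind."""

    # Injected dependency: tests can pass a fake clock instead of the real time.
    clock: Callable[[], float]
    _attributes: Dict[str, str] = field(default_factory=dict)
    # Observation point: a structured record of what the service did internally.
    events: List[Tuple[float, str, str]] = field(default_factory=list)

    def set_attribute(self, name: str, value: str) -> None:
        if not name:
            self._emit("rejected", "empty attribute name")
            raise ValueError("attribute name must not be empty")
        self._attributes[name] = value
        self._emit("updated", name)

    def get_attribute(self, name: str) -> str:
        return self._attributes[name]

    # Control point: jump directly to a known state instead of driving the
    # service there through a long sequence of API calls.
    def seed(self, attributes: Dict[str, str]) -> None:
        self._attributes = dict(attributes)
        self._emit("seeded", f"{len(attributes)} attributes")

    # Observation point: a copy of the internal state for inspection in tests.
    def snapshot(self) -> Dict[str, str]:
        return dict(self._attributes)

    def _emit(self, kind: str, detail: str) -> None:
        self.events.append((self.clock(), kind, detail))
```

A test can then seed the service, perform a single operation and assert on both the resulting state and the recorded events, rather than inferring what happened from the outside.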

The transformation was staggering and convinced me that testability was the key to moving at speed without compromising quality. I also realised that the huge quality improvements weren’t driven by testing. They were driven by building relationships and influencing the right people at the right time to build quality.

I began consuming everything I could find on the subject of testability. I studied the content and models of James Bach, Michael Bolton, Anne-Marie Charrett, Maria Kedemo, Ash Winter and Bob Binder, to name but a few. My CODS mnemonic helped improve the testability of the product itself, but it didn’t address the environment in which the testing was performed.

Helping teams capture their testing debt

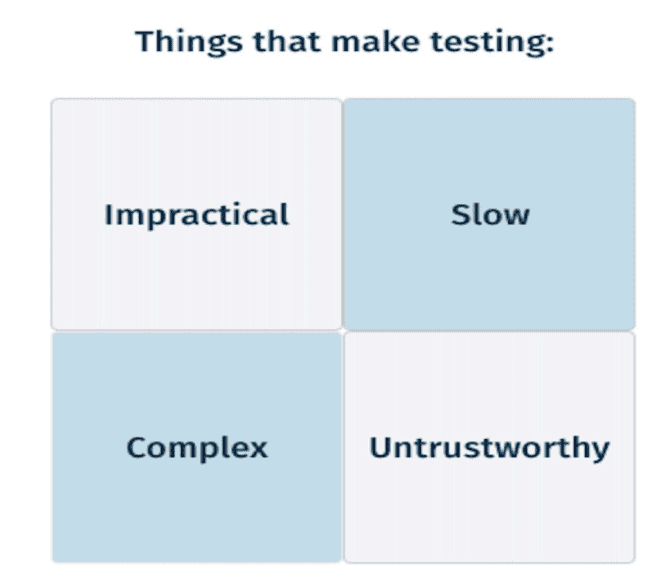

Around this time, I began coaching development teams to improve their testing practices. I created a simple model called the testing debt quadrant to help teams capture their testing debt. Testing debt occurs when a team chooses an option that yields a benefit in the short term but accrues testing costs in time, effort or risk over the longer term. In other words, testing debt is the manifestation of a lack of testability.

The exercise requires the whole team to capture details of anything that makes their testing activities impractical, slow, complex or untrustworthy.

This exercise is a simple way of understanding the unique testing challenges each team faces. It provides a great starting point for discussing and addressing testability.

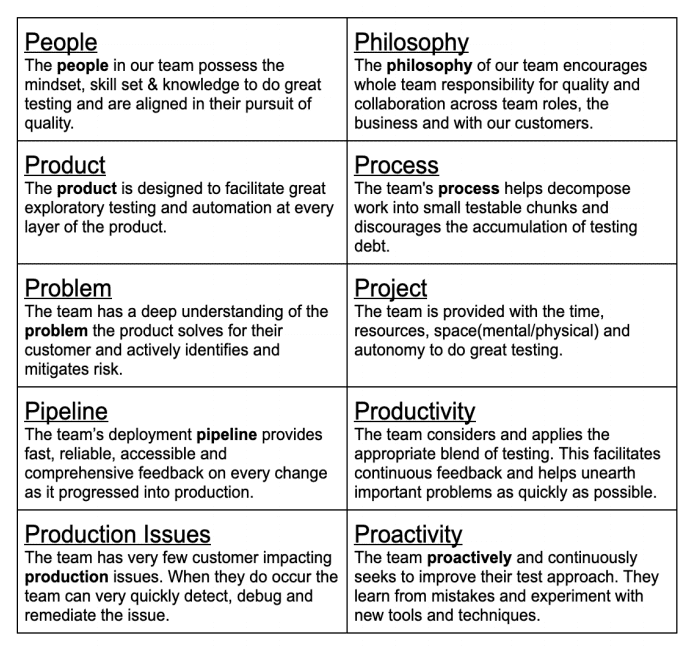

The 10 Ps of testability

The more I worked with teams, the more clearly the recurring sources of testing debt emerged. It became apparent that a common set of factors influences a team’s testing experience. I called this model the 10 Ps of Testability. The 10 Ps provide a lens through which teams can self-assess their testing experience. By viewing their testing experience through each of these lenses, teams can better determine where to invest their improvement efforts.

The 10 Ps model has proven to be hugely versatile. I’ve used it as a quarterly testability health check for development teams, and I have used it to create career development plans for testers. Whenever I have to think about a testing-related problem, I inevitably find myself thinking through the 10 Ps.

Why do cars have brakes?

I’ll finish up with an analogy. Consider for a moment: why do cars have brakes? Is it to slow us down? Is it to keep us safe? These answers are correct, but ultimately cars have brakes so that they can go fast. Today’s software development teams are moving faster than ever. Our teams need effective and efficient brakes; we need great testability. Without reliable testing to slow us down when we’re in danger, we’re likely to crash headfirst into a wall.

To summarise: encourage the whole team to talk about their testing experience. Use the models described here to identify and understand your testing challenges, and work together to overcome those challenges as a team.

Rob Meaney

Rob Meaney is an engineer who loves tough software delivery problems. He works with people to cultivate an environment where quality software emerges via happy, productive teams. When it comes to testing, Rob has a deep and passionate interest in Quality Engineering, Test Coaching, Testability and Testing in Production. Currently, he’s working as Director of Engineering for Glofox in Cork, Ireland. Rob is the co-author, with Ash Winter, of “A team guide to testability”. He is also a keynote speaker and the co-founder of Ministry of Test Cork. Previously, he held positions as Head of Testing, Test Manager, Automation Architect and Test Engineer with companies of varying sizes, from large multinationals like Intel, Ericsson & EMC to early-stage startups like Trustev.