In my experience, by far the biggest risk to the successful implementation of Exploratory Testing is a complete misunderstanding of what it actually is.

Three years ago I was the first test manager in my company (to my knowledge) to formally introduce Exploratory Testing (ET) as a phase in a testing project. I could easily articulate some of the benefits it would bring to the project. Earlier testing = earlier clarification of requirements, not to mention earlier, and therefore cheaper, defect detection. I was able to deliver these benefits to the project, but my lack of true comprehension with regard to the definition of ET meant I did not fully understand how to support my team, who were also trying it for the first time.

In this series of articles, I will share the concerns that have arisen whilst implementing ET and some potential solutions to overcome them. This first article will address:

How to gain the confidence of the test team

After the first attempt at implementing ET, I attended James Bach’s Rapid Software Testing (RST) training course. I expected 5 days of training on Exploratory Testing. What I actually gained was so much more: 5 days of training on RST, of which ET is just a small but essential part. James’ course made me realise the wealth of underlying skills and techniques that anyone calling themselves a ‘tester’ should strive to develop. It was these skills and this understanding that my test team needed to develop to become confident exploratory testers (not to mention better testers overall).

Isn’t Exploratory Testing just another name for Ad-hoc Testing?

This is often the first misconception that arises during the training courses I run. Of course, ET can be ad hoc: if you are running ad-hoc tests, you are most likely exploratory testing. However, we can still gain the benefits of ET (early testing, early defect detection, less script maintenance and rework) whilst placing sufficient structure around it to be confident of the coverage we will achieve.

More and more I use the term ‘Structured Exploratory Testing’. ET can be structured because:

- We are not just banging a keyboard without thinking about our actions or the result.

- We do plan & communicate in advance what we are going to test.

- We do analyse and understand what we expect to see when we test.

But it is still exploratory testing because:

- We have not planned the exact steps we will take to perform a specific test.

- The tester has the freedom to choose the next best test to perform, based on what they learned from their analysis plus what they learn about the system whilst testing.

- The test is repeatable but will not be performed in exactly the same way each time it is run.

How can a test be repeatable, if it cannot be run the same way each time?

This is another concern often raised by testers. Some in the testing industry have become so convinced that a test must be performed exactly the same way each time (perhaps by companies selling very expensive automation tools, or by very ‘robotic’ testers?) that they miss the value in running the same test in a slightly different way. Think about it: when you use MS Word, do you use it exactly the same way each time? Sometimes I use the mouse and sometimes a keyboard shortcut to achieve the same action. If one user performs actions differently, imagine the variance when a system has multiple users! Perhaps randomness in test data is important, or the number of times you click on a button, as a user may do in frustration when the application appears to be frozen (which often causes a complete system crash... try it!). Any of these variances could give you important new information about the product you are testing, but would it be possible, valuable or ethical to spend company time writing a detailed test case for each of these scenarios?

When we repeatedly run the same test, we simply confirm what we already know. Would it add more value to learn something new about your application? As long as you test the core functionality, it is often cheap, yet informative, to change the specifics of how you test. And if it really is critical that the test is performed precisely… then congratulations, you have identified one of those rare occasions where Exploratory Testing would not be suitable… so write a script!

So, let me define Exploratory Testing! When using the term ‘ET’, I mean ‘simultaneous (and cyclical) learning, test design and test execution’. With this definition in mind…

How can testers be sure not to miss anything important when testing, if no scripts are written beforehand?

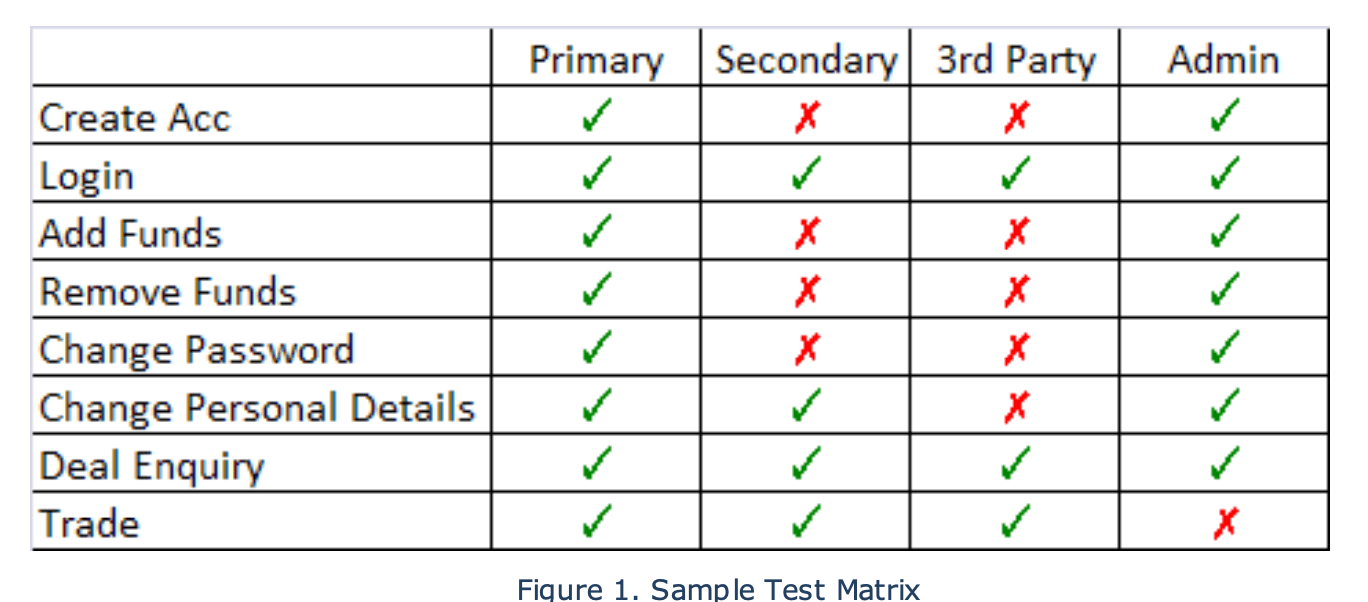

Firstly, I want to clarify that ET does not mean ‘no documentation’. We created documents known as ‘charters’, or high-level test scenarios, and backed these up with lightweight but informative test matrices (see Figure 1). As a manager, I found these sufficient assurance that my team was achieving the coverage required during testing. I had spoken with them and trusted they understood what and how to test, without writing it down first.

What surprised me was that some of my team struggled with the absence of the test script creation phase. Personally, I found writing test scripts a waste of time, as the steps are rarely followed to the letter during execution anyway. I have since realised that for a lot of testers, writing scripts is the method they use to develop their understanding of the system, and therefore to design their tests and the expected results. These testers were not comfortable letting go of a process they’d used for years without having a new one to replace it. This removal of structure is a big cause of worry for some testers. They fear they may miss testing something important if they haven’t written down their test ideas or expected results in detail first.

The analysis is the key…

I have since understood that time not spent writing detailed test scripts is better spent actively learning about the system; in other words, performing test analysis. This means learning about the risks, constraints and potential test coverage to develop a more informed and targeted test approach.

…Analysis and Communication!

But the critical factor that ensures success is communication! I now enable my testers to be confident they will test the right things by giving them the time to talk with others and develop their test ideas. Sounds like it takes too much time? Without exception, teams doing this in a structured way have analysed the system and defined a valid test approach faster and more effectively than the teams who sit in silos churning out test scripts.

We use a combination of brainstorming with Heuristic Checklists (google them!) and mind mapping to capture & present the results. This detailed analysis session (also known as a survey or intake session) provides clarity on the understanding of the system, and the ability to ratify that with other people. Once the system and risks are better understood, we can determine the coverage. The test design & execution approach is then discussed. And all this before anything is documented (although the documentation by way of test matrix or mind-maps is often created at the same time).

Proactive teamwork, not rework

This replaces the traditional Peer Review with a more proactive and motivating process that encourages continuous learning and removes the need for rework. It has proven more efficient than designing tests on a solitary basis and having those design ideas (scripts) reviewed afterwards. Some projects include their developers or business analysts (BAs) in these sessions. Where this is not possible, the testers walk the developer or BA through the results of the brainstorming to get their opinion on the risks and test coverage. This improves relationships on the project by building trust, respect and knowledge throughout the project team. It’s important to include your whole test team in this. What better way for your more junior team members to gain an understanding of the system?

Having gained this clarity of understanding about what ET means, and having had time to analyse the system instead of focusing on documenting it, our testers emerge confident they know what is important to test. They are confident because they have been allowed to openly discuss and resolve their concerns and knowledge gaps. They have the confidence to proceed without creating detailed test scripts which, without fail, require future rework and maintenance as the system inevitably changes.

In the next article, I’ll share how we gain the confidence of project teams when adopting exploratory testing.

Leah Stockley

Head, Transformation Capabilities at Standard Chartered Bank. Leading and driving culture change across business and technology. Specialities: Culture Change & Transformation, People Development: Learning and Career Pathways, Agile Ways of Working, Human Centred Design, Quality Strategy & Management, Context-Driven Testing, Training, Coaching and Mentoring, Implementing Exploratory Testing in Regulated environments, Blogger and Conference speaker on above topics