In my experience, by far the biggest risk to the successful implementation of Exploratory Testing (ET) is the complete misunderstanding of what is meant by the term. In this series of articles, I share the concerns that have arisen whilst implementing ET and some potential solutions to overcome them.

In the first article, I addressed how to help the test team gain the confidence to Exploratory Test. Once teams understand that ET allows them to be better testers, the next concern raised is that their project team would never allow them to implement ET. This second article will address:

How to gain the confidence of your stakeholders

Firstly, by stakeholders I mean project managers, BAs, developers, CEOs, users … anyone who has an interest in the testing or can make a decision which affects it. Before I go on, let me share how not to do it. If you tell your stakeholders “We’re not going to write test scripts anymore. They take too much effort. Instead, we’re going to exploratory test. I read about it on the Internet and it worked for Microsoft”, they may actually hear “We aren’t going to produce any documentation. You will no longer be able to track our progress. We’re cutting corners and removing process. Just trust us”. Unless you have a laid-back stakeholder, this approach is unlikely to convince them that implementing ET is in their best interests.

Prepare for the discussion

Dale Carnegie gave essential advice for influencing stakeholders: “Talk in terms of other people’s wants”. In other words, tell them why they would want you to use ET and how it will benefit them. In order to do this successfully, I start by asking myself the following questions:

- What do my stakeholders expect from the test team?

- What is important to my stakeholders?

- What might be their concerns when introducing ET?

“A Tester’s job is to produce test cases”

The common answer to question 1 is “We expect the testing team to produce and execute test cases”. Whilst this expectation may be true, we need to dive deeper and clarify the underlying intention behind the statement. In reality, stakeholders “demand” test cases because that is how the industry has taught them to manage the test team. Our job is to help them understand what they actually need from us and re-educate them on how best to manage us so we can deliver to their expectations. When you drill down, the underlying expectations look more like “Prevent users from finding issues when the software is live” or “Help deliver better quality code to UAT”. Production of test cases gives no guarantee of either of these. You may or may not have this exact conversation with your stakeholders, but it is important that, as testers, we understand our fundamental purpose on the project.

ET can be all things to all projects

Question 2 reminds me to consider what is important to my stakeholder. Often when we discuss ET we assume the benefit is saving money or time. I once based a presentation around that assumption. I got halfway through before hearing “I don’t care about saving time or money. I care about the quality of the product”. Thankfully I also had a good case study to demonstrate how ET can address that too: a project whose test effort increased from 2 weeks to 6 months, because the improved analysis required for successful ET meant the team could communicate the impact of the proposed change and therefore clearly show the risks and the test effort required to mitigate them. In order to avoid this mistake, I now directly ask the stakeholders what is important to them.

Start off on the right foot

Question 3 is perhaps the most powerful. I put myself in my stakeholder’s shoes. This is easier if I know them, but still possible if not: I find someone who does know them and can give some insight into their character and work style. I then consider what is most likely to scare them and cause them to reject the proposal … knowing this, I can start the conversation with a focus on aspects they will identify and agree with. If they have a leaning towards Agile processes, then I can focus on the efficiency of less documentation and the collaboration gains. But if they are more conservative and enforce rigid process and documentation, I will discuss “a new structure” and give examples of the documentation we will produce. Ultimately I am describing the same approach to both, but tailored to the needs and wants of the audience to show I can anticipate and address their concerns.

So how does this information help to gain stakeholders’ confidence in ET?

I recently presented to a team who are notorious for adhering to strict processes. I knew that starting the conversation with a focus on less documentation would immediately alienate them. Instead I began by explaining why it was important to change our existing process (because it was preventing us from delivering to their project’s expectations of timescales and quality). I said I would like to suggest a “New Structure” that would allow us to deliver successfully. Once I had an agreement on the reason behind the change and confirmed I was refining the structure, not taking it away, the stakeholders were very open to listening to my ideas. I then used reassuring terms such as SET, “Structured Exploratory Testing” (see part 1 of this series in last month’s TTWT), and showed how we would map test coverage and agree on priorities with them using Visual Test Models (see my recent blog post http://lnkd.in/hxhwpm).

I explained how we walk stakeholders through high-level test scenarios and how we provide auditable test evidence by taking detailed notes as we test. I showed an example of how we report progress and quality. In short, I showed how their concerns (test coverage, planning, audit requirements and progress reporting) would be addressed in a positive, structured way before they even raised them.

Explain the benefits for them, not for you

From experience, I can guarantee that if I had started the presentation by saying we want to use exploratory testing and would no longer write test scripts, I would have been on the back foot and defending my position from the start of the meeting. Instead, I gained the project’s agreement because I helped them understand how SET would benefit them. If I had told them we want to use ET because the traditional way wastes time creating scripts that never get followed to the letter, and causes us much maintenance and rework … that audience honestly wouldn’t have cared, because that wasn’t a frustration or concern they could relate to.

Reporting test progress to Stakeholders without test cases

For many years now, the test case pass/fail/no-run count has been the de facto measure of completion of testing. We are conditioned to produce the numbers and the stakeholders are used to looking at them to interpret our progress. So how can we replace this familiar structure?

Start by asking yourself “What information am I trying to ascertain from a test case count?” In the majority of cases, it would be:

- Are we on schedule? (Total executed vs. total planned)

- How important is the feature? (More test cases = more effort = more importance)

- What’s the quality of the feature? (How many have passed/failed)

Now ask yourself whether one number can accurately represent all of those pieces of information. Is there a potential for that number to mislead people? Have you ever had to placate the project because on day 2 of a 10-day project you are not 20% of the way through your test cases? I have on many occasions. As testers, we understand that the first few days will be slower due to environment troubleshooting or the discovery of more defects. Wouldn’t it be good if we could prevent those phone calls that start with us defending ourselves and waste everyone’s time? Wouldn’t it be better to report in a way that makes it very clear when the reader should or should not be concerned?
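To make the day-2 example concrete, here is a minimal sketch of how a straight-line reading of test case counts can raise a false alarm. All figures (100 planned test cases, the daily execution totals) and all function names are hypothetical, chosen only to illustrate a slow start followed by an on-time finish.

```python
# Hypothetical figures: 100 planned test cases over a 10-day test window.
PLANNED = 100
TOTAL_DAYS = 10
# Cumulative test cases executed by the end of each day (illustrative data only).
EXECUTED_BY_DAY = [5, 12, 25, 40, 55, 68, 80, 90, 96, 100]


def linear_target(day, total_days=TOTAL_DAYS):
    """Percentage a straight-line reading expects to be complete by `day`."""
    return 100 * day / total_days


def actual_percent(day, executed_by_day=EXECUTED_BY_DAY, planned=PLANNED):
    """Percentage of planned test cases actually executed by `day`."""
    return 100 * executed_by_day[day - 1] / planned


def gap_in_points(day):
    """How many percentage points the count lags the straight-line target."""
    return round(linear_target(day) - actual_percent(day))


if __name__ == "__main__":
    for day in range(1, TOTAL_DAYS + 1):
        print(f"Day {day:2}: target {linear_target(day):5.1f}%  "
              f"actual {actual_percent(day):5.1f}%  "
              f"gap {gap_in_points(day):3} points")
```

With these made-up numbers the count looks behind schedule on day 2 even though testing still finishes on day 10, which is exactly the kind of signal that triggers an unnecessary phone call.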

Fact-based test reporting

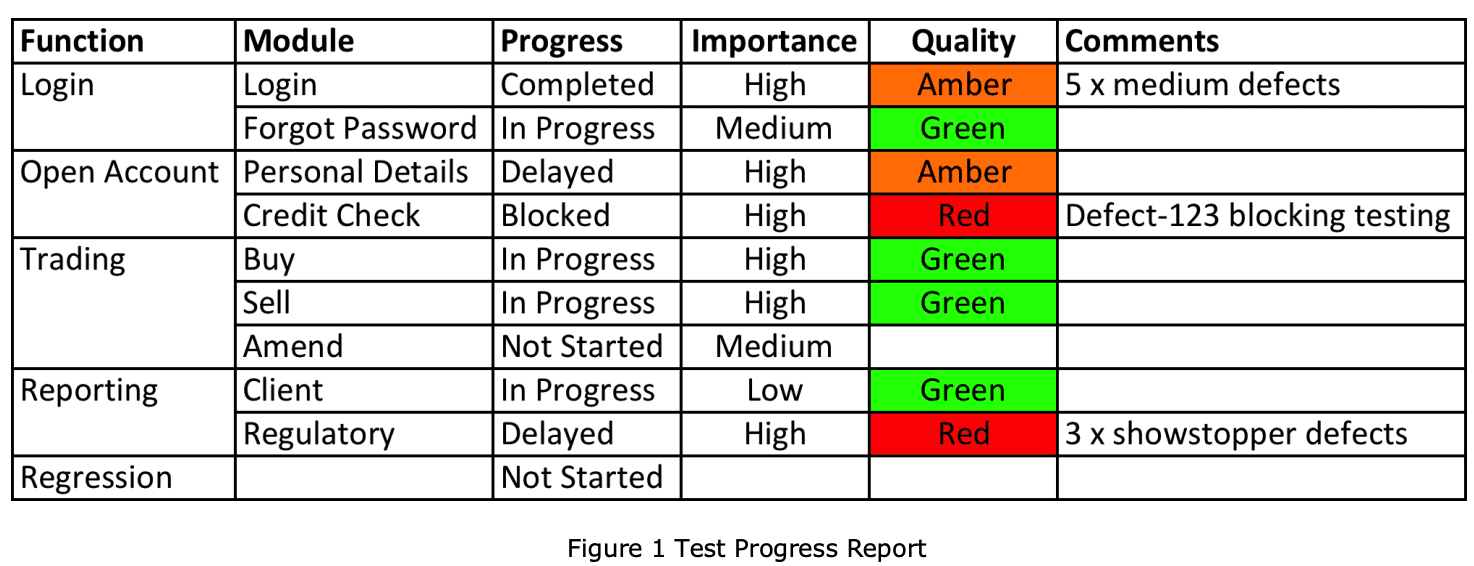

These thoughts inspired me to redesign our standard test progress report. Figure 1 shows an excerpt from it. We now provide explicit information where previously we used numbers. We walk stakeholders through the new format before we start using it and agree on the definitions of Importance/Quality with them.

We actually considered (at length) the inclusion of a “% complete” column to appease those who like numbers. But we realised that, if asked to explain how we derived that percentage, we would be unable to (with or without test cases, as not all test cases are equal). We considered developing a sophisticated risk- and complexity-weighted measure to produce the number more accurately, but agreed the effort involved to make that valid and reliable would far outweigh the benefit of including the number in the report.
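A small sketch shows why “not all test cases are equal” makes a single percentage hard to defend. The test cases, their effort estimates and the two calculation functions below are invented for illustration; effort in hours is only one of many possible weightings and is not the measure we considered.

```python
from dataclasses import dataclass


@dataclass
class ScriptedCase:
    """One scripted test case with a hypothetical effort estimate."""
    name: str
    effort_hours: float
    executed: bool


# Illustrative data only: three quick checks done, one large scenario outstanding.
CASES = [
    ScriptedCase("Login check", 1, True),
    ScriptedCase("Logout check", 1, True),
    ScriptedCase("Edit profile", 2, True),
    ScriptedCase("End-to-end payment", 16, False),
]


def percent_by_count(cases):
    """Percent complete when every test case counts equally."""
    return 100 * sum(c.executed for c in cases) / len(cases)


def percent_by_effort(cases):
    """Percent complete when each test case is weighted by estimated effort."""
    total = sum(c.effort_hours for c in cases)
    done = sum(c.effort_hours for c in cases if c.executed)
    return 100 * done / total


if __name__ == "__main__":
    print(f"By count:  {percent_by_count(CASES):.0f}% complete")
    print(f"By effort: {percent_by_effort(CASES):.0f}% complete")
```

The same execution state reads as 75% complete by count and 20% complete by effort. Neither figure is wrong, and a stakeholder has no way of knowing which one they are looking at.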

How we report testing and quality is a conversation we should be having with our stakeholders, regardless of whether we write test cases. We can unintentionally mislead them by relying on numbers and graphs to tell the story. So we took the decision to roll this report format out to all projects, even those that still use test scripts. Overwhelmingly the feedback has been positive, because stakeholders now receive information that clearly shows where the problems are.

Removing the Blockers for ET

Understanding how to report progress is a major blocker to some projects implementing ET. Having removed a reliance on test case counts from reports, stakeholders more readily understand how testing can add value without test cases. It allows them to focus on the important information the test team provides… clear information about the status of risks within the product.

This makes future conversations about Exploratory Testing much easier because the underlying reason for the change is the same: “We focus on providing you important information on the quality of the software we are testing, not the quality of our testing process.”

Understand why you want to make changes

Some people thrive on change, while others run away from it. The remainder might follow when someone else has proven it will work. Fundamentally, when considering implementing a change, especially to an “established” process (e.g. test scripting, test reporting), I consider the questions:

- What is the underlying purpose of the current process? (When I’ve answered, I dig deeper by repeatedly asking myself “And what does that give me?”)

- What are the problems/frustrations with the current process?

With these questions answered, I am better able to design a suitable process and understand how to articulate the need for change to others. When combined with the three stakeholder questions from earlier in this article, I’m also able to explain the benefits in their language. By focusing the conversation directly on the stakeholders’ needs, we are better able to anticipate their perceived risks, alleviate concerns and demonstrate the benefits of implementing Structured Exploratory Testing.

Having addressed the concerns which arise from both the tester’s and the stakeholder’s point of view, the next article will focus on addressing the concerns of test managers themselves when starting to use Exploratory Testing.

Leah Stockley

Head, Transformation Capabilities at Standard Chartered Bank. Leading and driving culture change across business and technology. Specialities: Culture Change & Transformation; People Development: Learning and Career Pathways; Agile Ways of Working; Human Centred Design; Quality Strategy & Management; Context-Driven Testing; Training, Coaching and Mentoring; Implementing Exploratory Testing in Regulated Environments. Blogger and conference speaker on the above topics.